Edit April 20th, 2021: thanks to Christos Petrou I found a bug in my code: I was counting both “Section” and “Collection” articles as Special Issue articles. The whole analysis has been updated to reflect the corrected data. I have also acknowledged in the text the arguments of Volker Beckmann, who develops a coherent defense of MDPI practices and disagrees with my overall take, and added references, near the end of the piece, to what MDPI (and traditional publishers) are doing for the Global South, thanks to input from Mister Sew, Ethiopia.

This post is about MDPI, the Multidisciplinary Digital Publishing Institute, an Open-Access only scientific publisher.

The post aims to answer the question in the title, “Is MDPI a predatory publisher?”, with some data I scraped from the MDPI website and some personal opinions.

Tl;dr: main message

So, is MDPI predatory or not? I think it has elements of both. I would call their methods aggressive rent extraction rather than predation. And I also think that their current methods and growth rate are likely to push them towards more predatory practices over time.

MDPI publishes good papers in good journals, but it also employs some strategies that are typical of predatory publishers. I think that the success of MDPI in recent years is due to the creative combination of these two apparently contradictory strategies. One (good, high-quality journals) creates a rent that the other (spamming hundreds of colleagues to solicit papers, an astonishing increase in Special Issues, publishing papers as fast as possible) exploits. This strategy makes a lot of sense for MDPI, which shows strong growth rates and is en route to becoming the largest open access publisher in the world. But I don’t think it is a sustainable strategy. It suffers from basic collective action problems that might do a lot of damage to MDPI first and, most importantly, to scientific publishing in general.

So that’s the punchline. Care to see where it stems from? In the following I will

- focus on the terms of the problem;

- develop an argument as to how the MDPI model works;

- try to give some elements as to why the model was so successful;

- explain why I think the model is not sustainable and is bound to get worse over time.

I’ll do so using some intuitions from social dilemmas and econ 101, a handful of personal ideas, and data scraped from MDPI’s website. The data cover the 74 MDPI journals that have an Impact Factor. They represent about 90% of all articles MDPI published in 2020 (somewhat less for previous years, as MDPI’s growth has concentrated in its bigger journals). You can find the data and scripts to reproduce the analysis on the dedicated GitHub page.

Ready? Let’s go.

The problem

Scientists are by and large puzzled by MDPI.

On the one hand, MDPI publishes journals with high impact factors (18 journals have an IF higher than 4), many of which are indexed in Web of Science. Many, if not most, papers are good. Distinguished colleagues in nearly all fields have served as Guest Editors or Editors for their journals, often reporting positive assessments. MDPI is Open Access, so it does not contribute to the very lucrative rent extraction at the base of Elsevier and other traditional publishers. MDPI’s editing is fast, reliable and professional; publication on the website is swift, efficient and smooth: all things that are hard to say of other, traditional, publishers. Several MDPI journals are included in the rankings that different countries use to evaluate research and to grant promotions to academics; for instance, Sustainability is “classe A”, the highest possible rank, in Italy (source: ANVUR).

On the other hand, MDPI is known for aggressively spamming academics with invitations to edit special issues, often in fields far from the expertise of the recipients of these frequent and insistent emails. Twitter is full of colleagues complaining that they get several invitations per week to contribute to journals they didn’t know existed and that lie outside their domains, for instance here, here or here. MDPI even asked Jeffrey Beall, the author of Beall’s list of predatory publishers, to edit a Special Issue in a field that is not his own. It goes further than annoying emails, though. In 2018 the whole editorial board of Nutrients, one of the most prestigious MDPI journals, resigned en masse, lamenting pressure from the publisher to lower the quality bar and let in more papers.

This duality has generated debate in several places, among others in two posts by Dan Brockington here and here, in a post on the ideas4sustainability blog by Joern Fischer, and on the Scholarly Kitchen blog.

A predatory publisher is one that would publish anything, usually in return for money. MDPI’s rejection rates make this argument hard to sustain. Yet MDPI uses some of the same techniques as predatory journals. So the question is simple: if you are a scientist, should you work with MDPI? Submit your paper? Review? (Guest) edit for them? Is MDPI predatory?

MDPI’s growth: how?

MDPI has grown impressively in recent years. It went from publishing 36 thousand articles in 2017 to 167 thousand in 2020. MDPI follows the APC publishing model, whereby the authors of accepted articles have to pay an Article Processing Charge (APC) before publication. MDPI’s APC has increased over time. It can exceed 2000 CHF (MDPI is based in Switzerland), but there are several waivers and discounts. MDPI reports that the average APC per article in 2020 amounted to 1180 €. Calculations by Dan Brockington show its revenue increasing from 14 mln $ in 2015 to 191 mln $ in 2020.

To know more about them, see their Annual Report 2020.
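To put these numbers in perspective, here is a minimal Python sketch of the compound annual growth rate (CAGR) implied by the rounded figures quoted above: articles from 36 thousand (2017) to 167 thousand (2020), and Dan Brockington’s revenue estimates from 14 mln $ (2015) to 191 mln $ (2020). `cagr` is just an illustrative helper, and since the inputs are rounded the results are approximate.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1

# Rounded figures quoted in the text.
articles = cagr(36_000, 167_000, 2020 - 2017)  # articles, 2017 -> 2020
revenue = cagr(14e6, 191e6, 2020 - 2015)       # revenue in $, 2015 -> 2020

print(f"articles: {articles:.0%} per year")
print(f"revenue:  {revenue:.0%} per year")
```

With these inputs both series grow by roughly two thirds per year on average (about 67% for articles and 69% for revenue).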

How did MDPI reach such high levels of growth? By cleverly exploiting the publish-or-perish culture widespread in academia, the fact that several countries mandate or encourage Open Access publication, and the rise of formal requirements for tenure and promotion within academia. But this state of affairs is independent of MDPI: anyone could have profited from it, yet others didn’t. So how did MDPI do it?

As far as I can see, the success of MDPI relies on two key pillars: a lot of special issues and a very fast turnaround.

An explosion of Special Issues

Traditional journals have a fixed number of issues per year (say, 12) and then a low to very low number of special issues, which can cover a particular topic, celebrate the work of an important scholar, or collect papers from conferences. MDPI journals tend to follow the same model, except that the number of special issues has increased over time, to the point of equaling, surpassing, and finally dwarfing the number of normal issues. Moreover, special issues are usually proposed to the editors of the journal by a group of scientists, and the editors accept or reject the offer. At MDPI, it is the publisher that sends out invitations for Special Issues, and it is unclear what role, if any, the editorial board of the normal issues plays in the process.

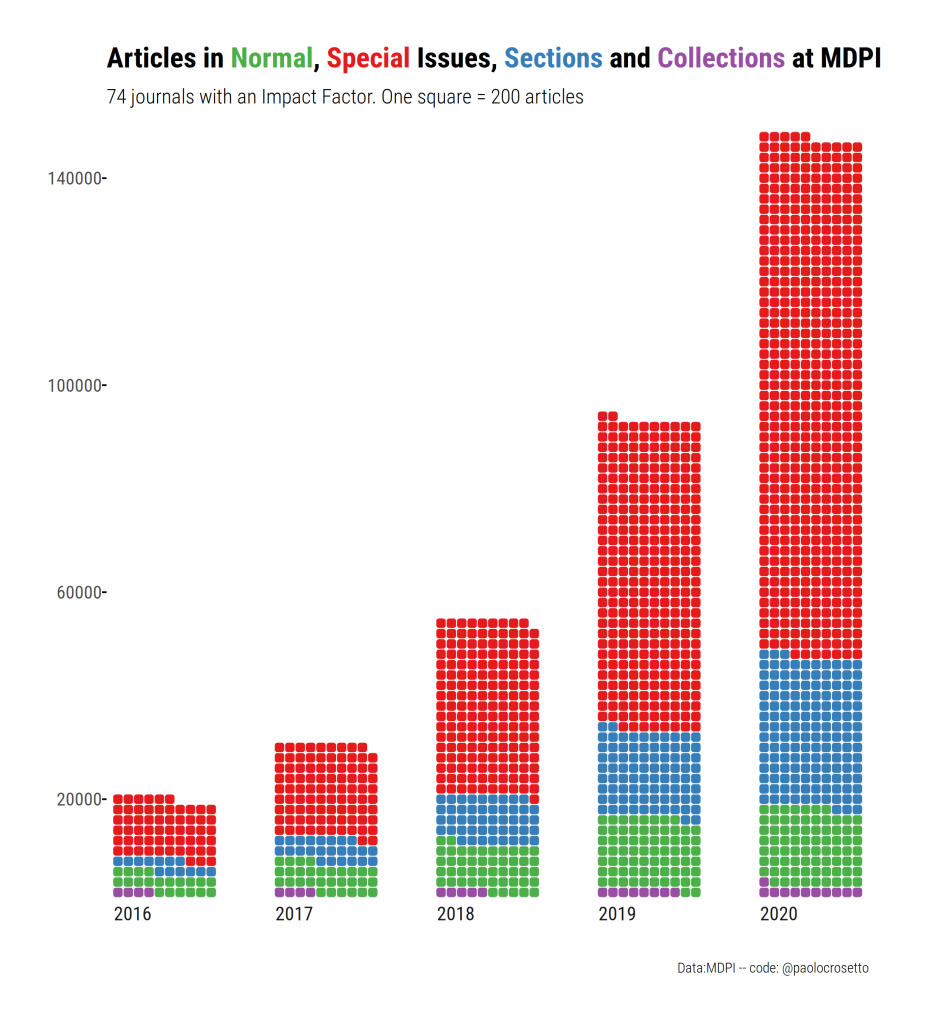

Virtually all of MDPI’s growth in the last years can be traced back to Special Issues.

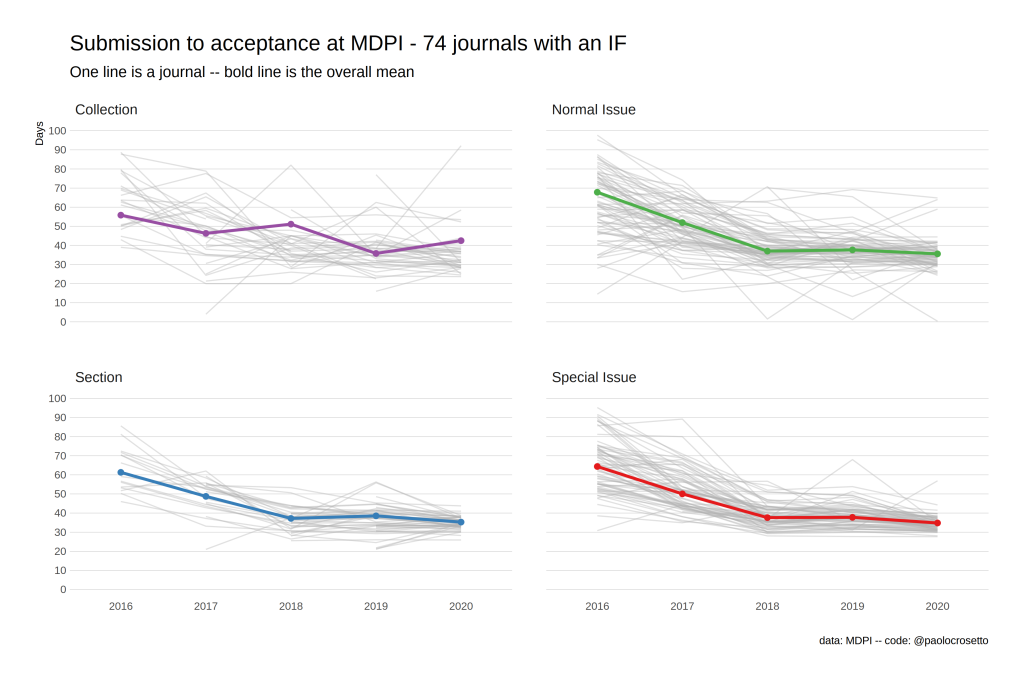

The figure below shows the growth in articles for the 74 MDPI journals with an IF, dividing them between articles published in normal issues, special issues, collections and sections. Sections are a way to create several distinct branches of a single journal. Collections seem more similar to special issues, since they have their own collection editor. Special issues already accounted for the majority of papers in 2017 (it was not so earlier on, but I only have article data from 2017), and grew rapidly from then on. While the number of normal issue articles increased 2.6 times between 2016 and 2020, the number of SI articles increased 7.5 times. Over the same period, the number of articles in Sections increased 9.6 times, while Collections increased 1.4 times. Articles in SIs now account for 68.3% of all articles published in these 74 journals.

MDPI journals are becoming more differentiated, through the use of Sections, and they rely more and more on special issues.

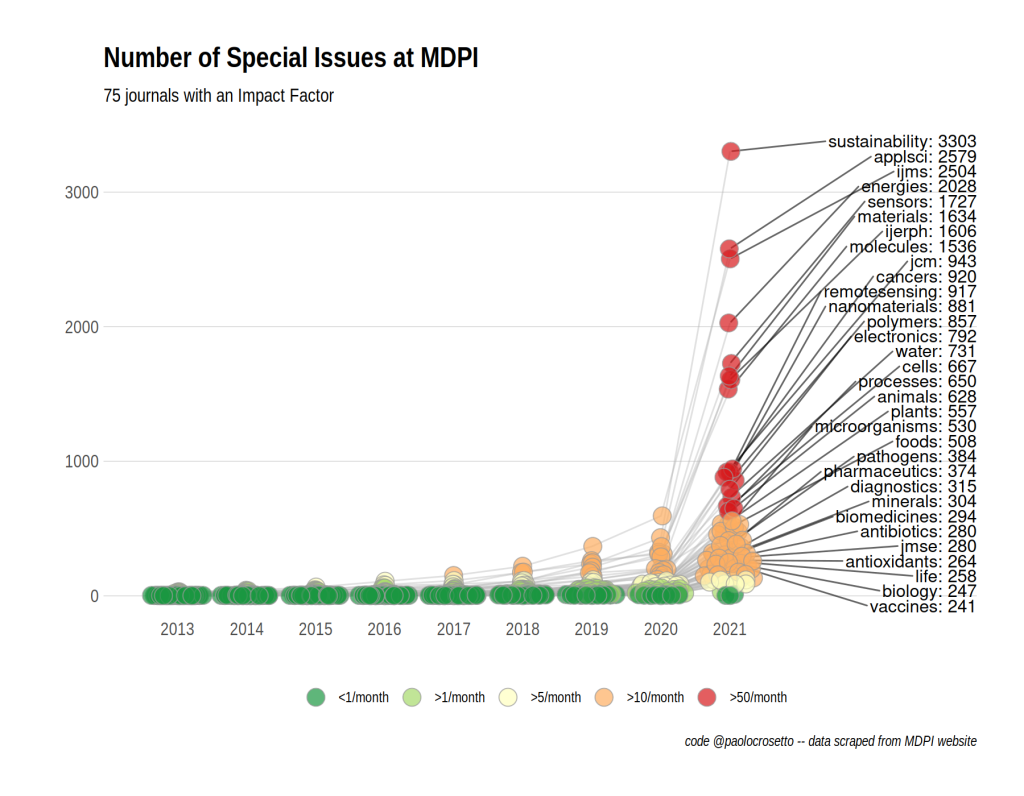
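Since the first version of this post counted Sections and Collections as Special Issues, the classification step deserves a quick illustration. The Python sketch below is hypothetical and is not the code in the GitHub repo: it assumes that each scraped article comes with a free-text label starting with “Special Issue”, “Section” or “Collection” (or no label for normal issues), and that label format is an assumption. The point is simply that every article lands in exactly one of the four buckets before shares are computed.

```python
from collections import Counter
from typing import Iterable, Optional

# Hypothetical label prefixes; the real scraped field may be worded differently.
CATEGORIES = ("Special Issue", "Section", "Collection")

def classify(label: Optional[str]) -> str:
    """Assign an article to exactly one of four categories.

    Sections and Collections get their own buckets instead of being
    counted as Special Issues.
    """
    if label:
        for category in CATEGORIES:
            if label.startswith(category):
                return category
    return "Normal issue"

def shares(labels: Iterable[Optional[str]]) -> dict:
    """Share of articles falling in each category."""
    counts = Counter(classify(label) for label in labels)
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()}

# Invented labels for five articles.
example = ["Special Issue Urban Heat", "Section Ecology", None,
           "Special Issue Soil Health", "Collection Water"]
print(shares(example))
```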

The explosive SI growth is reflected also in the number of special issues, overall (table) and by journal (figure).

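| Year | 2013 | 2014 | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 | 2021* |
|---|---|---|---|---|---|---|---|---|---|
| Special Issues | 388 | 475 | 710 | 990 | 1386 | 2342 | 4096 | 6756 | 39587 |

\* 2021: provisional count of Special Issues open and awaiting papers as of March 2021.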

Across the 74 journals, there were 388 Special Issues in 2013, about five per journal. In 2020, there were 6756 SIs, somewhat less than a hundred per journal. The provisional data for March 2021 count 39687 SIs that are open and awaiting papers, about 500 per journal. Not all of them will go through: many will fail to attract papers, and others will be abandoned by their Guest Editors. But in all likelihood SIs in 2021 will be much more numerous than in 2020.

SIs increase at all journals, in some cases exponentially. Some journals have an unbelievably high number of SIs. In March 2021, Sustainability had 3303 open Special Issues (compared to 24 normal issues). That is about 9 SIs per day, just for Sustainability. 32 MDPI journals have more than one open SI per day of 2021, Saturdays and Sundays included.

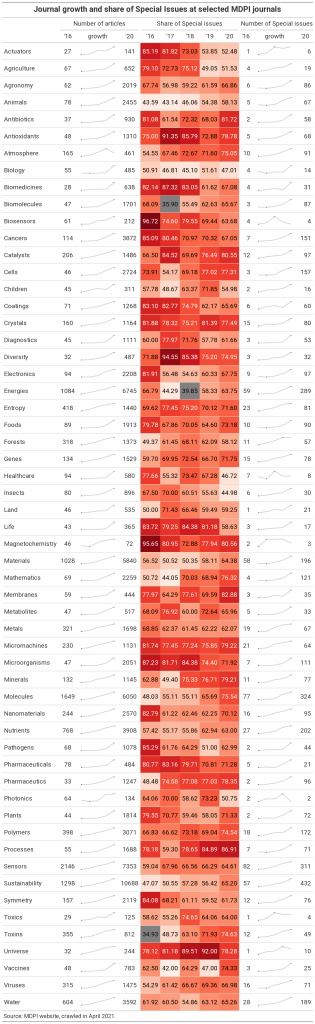
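The per-journal and per-day figures are plain arithmetic on the counts quoted above. A minimal Python sketch using only numbers from the text; reading “more than one open SI per day” as “more than 365 open SIs” is my interpretation, encoded in the hypothetical helper `more_than_one_per_day`.

```python
JOURNALS = 74        # MDPI journals with an Impact Factor covered by the scrape
DAYS_IN_2021 = 365

si_counts = {2013: 388, 2020: 6756}  # Special Issues per year, from the table
for year, n in si_counts.items():
    print(f"{year}: {n / JOURNALS:.1f} SIs per journal")

def per_day(open_sis: int, days: int = DAYS_IN_2021) -> float:
    """Open SIs per day of the year."""
    return open_sis / days

def more_than_one_per_day(open_sis: int, days: int = DAYS_IN_2021) -> bool:
    """Assumed reading of 'more than one open SI per day': more than 365 open SIs."""
    return open_sis > days

print(f"Sustainability: {per_day(3303):.1f} open SIs per day")
```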

The “Journal Growth” table in the data appendix at the end of this post reports the growth of articles and number of SIs for each MDPI journal that published at least 100 articles in 2020. It also shows the share of articles that appear in SI rather than in the normal issues. This share has followed different paths in different journals, mainly because of the rise of Sections and Collections, but is still very high for virtually all journals.

Are Special Issues a problem?

SIs are good because they pack together similar articles, increasing the readability of an ever-growing literature. They can contribute to the birth or growth of research teams, consolidate networks or help build new ones, and provide a venue for interdisciplinary research, which is often squeezed out of traditional disciplinary journals.

But they ought to be special, as the name says, and they ought to be under the control of the original editorial boards. In most (if not all) non-MDPI journals, SIs are managed by the journal’s editorial board together with the guest editors. Not so at MDPI. It is the publisher that sends out the invitations (often mass-sending them without much regard for their appropriateness). This, coupled with the exponential explosion of SIs, marginalises the editors of the original journal. The people who created the reputation of the journal in the first place are sidestepped by an army of MDPI-invited Guest Editors.

While I will discuss the implications of MDPI’s SI model later, I think the data prove beyond doubt that the most important MDPI journals are turning, at an accelerating pace, into collections of sometimes loosely related Special Issues. Normal issues are disappearing.

A coordinated reduction of turnaround times

Traditional publishers can be extremely sluggish in their turnaround. Scientists share horror stories about papers stuck in review for years. The situation is particularly bad in some fields (economics: I’m looking at you) but it is generally less than optimal.

MDPI prides itself on its very fast turnaround times. In its Annual Report 2020, MDPI reports an average time to first decision of 20 days. This is extremely fast. After submission, a paper must be assigned to an editor; this editor may have to find an associate editor, and then find referees. It is hard to find the right people to review a paper, and they might not be available. Once referees have been found and have accepted, they need time to write their reports. Then the editor has to read the reports and make a decision. 20 days is really fast.

But MDPI does not provide aggregate statistics on the time from submission to acceptance. This interval includes revisions, and is crucial to understanding how the editorial process works. To get these data, I scraped MDPI’s website. The information is public: for each paper, we know the submission date, the date when the revised version was received, and the acceptance date. The aggregate results are shown in the figure below. Three main takeaways: 1. there is not much difference between normal issues, special issues, sections and collections; 2. MDPI managed to halve turnaround times from 2016 to 2020; 3. the variance across journals has gone down together with the mean.

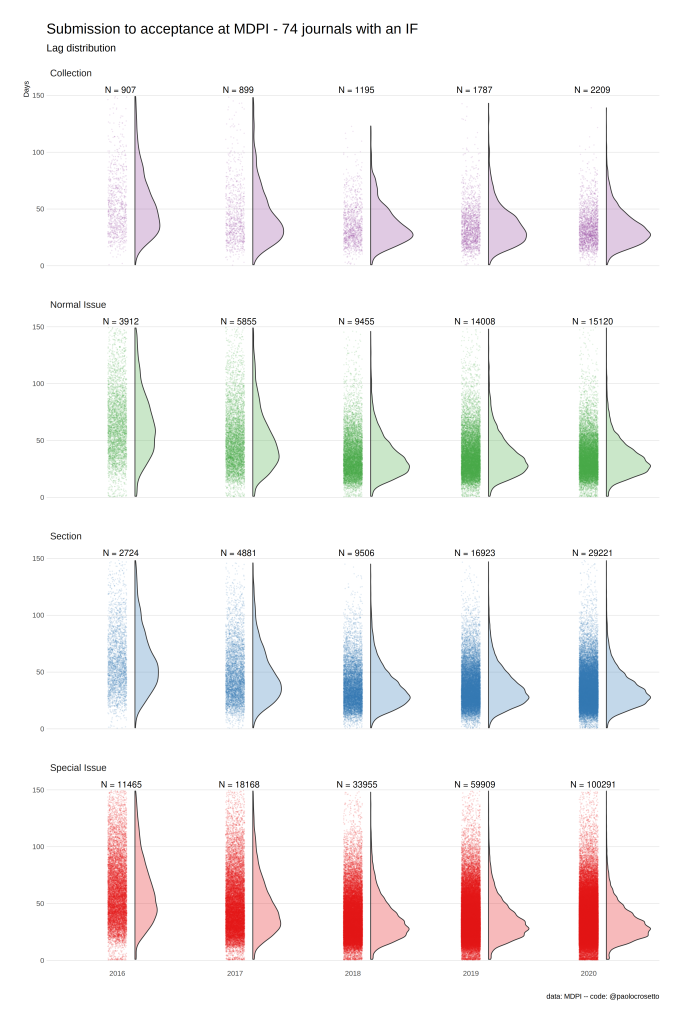
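Computing the turnaround is simple date arithmetic on the three dates listed for each paper. Here is a minimal Python sketch, assuming the dates have already been extracted as ISO strings (YYYY-MM-DD); the function `turnaround` and its keys are hypothetical names, not those of my scripts or of MDPI’s pages. Besides the total, it splits the time at the revised version: submission to revision covers review plus the authors’ revision, and revision to acceptance covers the rest.

```python
from datetime import date
from typing import Optional

def turnaround(submitted: str, accepted: str, revised: Optional[str] = None) -> dict:
    """Days between the (assumed ISO-formatted) dates shown for a paper."""
    sub = date.fromisoformat(submitted)
    acc = date.fromisoformat(accepted)
    result = {"submission_to_acceptance": (acc - sub).days}
    if revised is not None:
        rev = date.fromisoformat(revised)
        result["submission_to_revision"] = (rev - sub).days   # review + revision
        result["revision_to_acceptance"] = (acc - rev).days   # after resubmission
    return result

# Invented dates for one paper.
print(turnaround("2020-03-02", "2020-04-06", revised="2020-03-30"))
```

For this invented paper, 35 days in total break down into 28 days up to the revised version and 7 days from revision to acceptance.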

But these are just means. Surely there is a lot of heterogeneity in turnaround, and some papers will take their time. There could also be hidden heterogeneity by field: economists have been shown to have different reviewing times and practices than, say, virologists. Let’s have a look.

Below is the raincloud plot of the overall distribution, cut at 150 days for the sake of visualisation. The cut leaves out about 3% of the papers in 2016 but, a further indication of shrinking turnaround times, only 0.3% of papers in 2020. On the left, each point is a paper. On the right, you see the kernel density estimate. There is heterogeneity, but it is rather low, and it is being dramatically reduced. The rather flat distribution of 2016 has been replaced by a very concentrated distribution in 2020. The distributions for normal and special issues are similar, with somewhat more variance for SI articles.

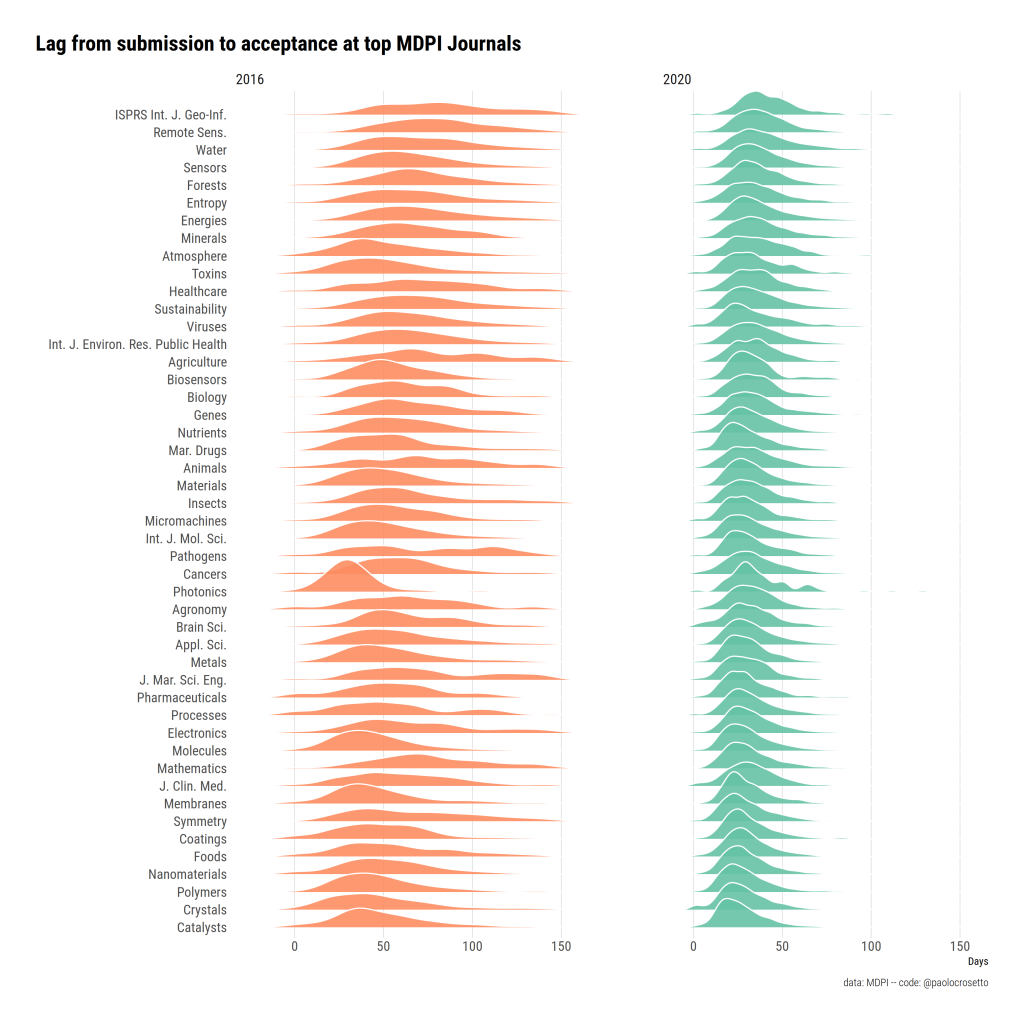
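The right half of each raincloud is a kernel density estimate. As a hedged illustration of what that curve computes, the sketch below implements a Gaussian kernel density estimate in plain Python, with the normal-reference bandwidth h = 1.06 × sd × n^(-1/5), after applying the same 150-day display cut. The plotting tools behind the figures may use a different kernel or bandwidth rule, and the turnaround times here are made up.

```python
import math
import statistics

CUTOFF = 150  # days; longer turnarounds are dropped for display only

def truncate(days, cutoff=CUTOFF):
    """Keep values <= cutoff and report the share that was dropped."""
    kept = [d for d in days if d <= cutoff]
    return kept, 1 - len(kept) / len(days)

def gaussian_kde(data):
    """Return a density function: Gaussian kernel, bandwidth 1.06 * sd * n**(-1/5)."""
    n = len(data)
    h = 1.06 * statistics.stdev(data) * n ** (-1 / 5)
    norm = 1 / (n * h * math.sqrt(2 * math.pi))

    def density(x):
        return norm * sum(math.exp(-0.5 * ((x - xi) / h) ** 2) for xi in data)

    return density

# Made-up turnaround times (days) for illustration.
days = [18, 22, 25, 30, 31, 33, 35, 38, 41, 47, 55, 160]
kept, dropped = truncate(days)
f = gaussian_kde(kept)
print(f"dropped {dropped:.1%}; density at 35 days: {f(35):.4f}")
```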

Are there differences by journal, or field? After all, we are talking about different people from different fields and, thinking back to the SI explosion, about an army of heterogeneous and uncoordinated guest editors. Below you find the distribution of turnaround times for the main MDPI journals (cut at 150 days).

The really striking finding here is that there is virtually no heterogeneity left. The picture from 2016 is what you’d expect: fields differ, journals differ. Some journals have faster turnaround times, others slower. Distributions are quite flat, and anything can happen, from a very fast acceptance to a very long time spent in review. Fast forward to 2020, and it is a very different world. For each and every journal, the distribution of turnaround times shrinks and hits an upper bound of around 60 days. The mean is similar everywhere, and the variance is not far off.
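“Similar mean, similar variance” can be checked by computing, for each journal, the mean and standard deviation of turnaround times, and then the spread of the journal means. A minimal sketch, assuming records come as (journal, days) pairs; the journal names and numbers are invented, and `per_journal_stats` and `spread_of_means` are hypothetical helpers.

```python
import statistics
from collections import defaultdict

def per_journal_stats(records):
    """Group (journal, days) pairs and return {journal: (mean, sd)}."""
    by_journal = defaultdict(list)
    for journal, days in records:
        by_journal[journal].append(days)
    return {j: (statistics.mean(d), statistics.pstdev(d)) for j, d in by_journal.items()}

def spread_of_means(stats):
    """Standard deviation of the journal means: low values mean journals look alike."""
    return statistics.pstdev(mean for mean, _ in stats.values())

# Invented data: two journals with similar, tight distributions.
records = [("Journal A", 30), ("Journal A", 34), ("Journal A", 38),
           ("Journal B", 32), ("Journal B", 36), ("Journal B", 40)]
stats = per_journal_stats(records)
print(stats)
print(spread_of_means(stats))
```

Tracking both numbers over the years would show the convergence described above: within-journal standard deviations shrink, and so does the spread of means across journals.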

This convergence cannot happen without strong, effective coordination. The data unambiguously suggest that MDPI was very effective at imposing fast turnaround times on every one of its leading journals. And this despite the fact that, given the Special Issue model, the vast majority of papers are edited by a heterogeneous set of hundreds of different guest editors, which should increase heterogeneity.

So: hundreds of different editorial teams, on dozens of different topics, and one common feature: about 35 days from submission to acceptance, including revisions. The fact that revisions are included makes the result even more striking. I may well be slow, but for none of my papers would I have been able to read and understand the reports, run additional analyses, read further papers, change the wording and/or the tables, and resubmit within one week of receiving the reports, unless the revisions were really minor. Can revisions be minor for so many papers?

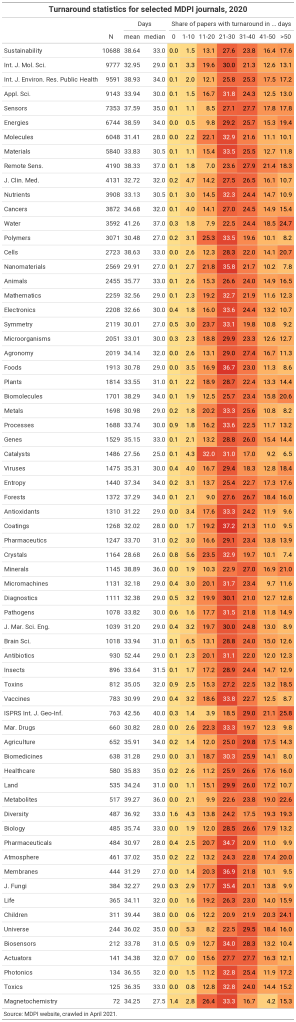

About 17% of all papers at MDPI in 2020 (25k papers) are accepted within 20 days of submission, including revisions; 45% (66k papers) within 30 days. A (too) detailed table in the data appendix at the end of the post shows turnaround times for all top MDPI journals. Its main point is to provide full information for particular journals, but also to highlight how little variance there is.
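Two small computations sit behind this paragraph. The Python sketch below (`share_within` is a hypothetical helper, and treating “within 20 days” as inclusive is an assumption) computes the share of papers accepted within given thresholds, and divides the quoted counts by the quoted shares as a consistency check on the rounded figures.

```python
def share_within(days, thresholds=(20, 30)):
    """Fraction of papers accepted within each threshold (inclusive), revisions included."""
    n = len(days)
    return {t: sum(d <= t for d in days) / n for t in thresholds}

# Consistency check on the rounded figures quoted in the text:
# dividing each count by its share should give roughly the same total.
implied_totals = (25_000 / 0.17, 66_000 / 0.45)
print([round(t) for t in implied_totals])

# Invented turnaround times (days from submission to acceptance).
print(share_within([15, 19, 20, 25, 29, 33, 40, 60]))
```

Both implied totals come out at roughly 147 thousand papers, in the same ballpark as the 74-journal sample (about 90% of MDPI’s 167 thousand articles in 2020).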

Is it bad to have very fast turnaround times?

Per se, no. Fast turnaround is clearly an asset, and MDPI shines at it. They cut all the slack for referees and editors, and this could be a good thing: papers usually spend most of their editorial lives, unread, in the drawers of busy referees and editors (economists, I am again looking at you).

But cutting editorial and referee times is good for science only if the quality of peer review can be kept up even at this very fast pace. A well-written referee report might take several days. People are busy with their lives and do not have just referee reports to write; good scientists’ time is scarce. Editorial decisions can also take time. All but the smallest revisions require substantial amounts of time.

Again, I will expand below on the implications of these extremely short lags, but let’s note that lags have decreased for all journals in a coordinated way and are now at the lower limit of what is compatible with quality reviews and revisions.

MDPI’s growth: why?

How could MDPI grow so much?

Whenever something sells so fast, the answer is trivial: demand. What MDPI sells is obviously in high and growing demand. MDPI could not sell it if scientists weren’t buying. But what does MDPI sell that competitors don’t?

MDPI sells high acceptance rates and very fast turnaround times, in special issues that are very likely to match your specific field and are run by a colleague you know, within journals that have a decent-to-high impact factor and that count in the official rankings of several universities and public agencies in the Western world. Exactly what scientists all over the planet, and especially in developing economies, need to keep competing in the publish-or-perish landscape of modern academia.

Getting into the details: is acceptance high? It is around 50% at most MDPI journals. While this is far from predatory practice (which would be 100%) and shows that MDPI journals do reject papers, acceptance rates are an order of magnitude higher than at most, say, economics journals.

Does MDPI cover all niches? The very fine grid of Special Issues guarantees that you’ll find an SI that seems tailored for you; if not, you can always accept MDPI’s invitation and tailor one yourself. The Impact Factor is high, and the fact that the journals count in national rankings covers your back. It’s a winning game.

The Special Issue model is key in providing incentives to contribute effort and to submit papers. Doing some serious editing is a requirement for tenure in many countries, so mid-career scientists will find the offer appealing; it also offers an easy way to publish papers from the multidisciplinary groups that are often required to obtain research funds. At the same time, you are more likely to send your paper to a journal you didn’t previously know if a colleague you know runs the Special Issue. Overall, the Special Issue generates incentives and increases trust in the system. It also motivates editors to convince fellow scientists to contribute.

The second key element is the high Impact Factor of the top MDPI journals. The system wouldn’t work without a quality indicator. The high IF is what covers scientists’ backs with respect to their funding agencies, employers, and colleagues. Yes, it’s not Nature. Yes, it’s not an exclusive top journal in my field. But look, it has a good IF and it is growing. [And look, Dr. X invited me.] Must be good.

The fast turnaround time and the high acceptance rate are the icing on the cake: you have very high chances of getting a publication in what for academics is the blink of an eye.

As a firm, MDPI should be admired for pulling off this extremely effective strategy. MDPI created a handful of journals with high IF from scratch. It devised the SI-based model. It managed to cut all slack times to zero and deliver an efficient workflow: mean times from acceptance to publication are down to 5 days in 2020 from nearly 9 days in 2016.

Still, I think this model is not sustainable, and stands a high chance of collapsing. It’s simple, really: it will likely collapse because journal reputation is a common pool resource, and MDPI is overexploiting it.

An unsustainable, aggressive rent extraction model

MDPI is sitting on a large rent: it controls access to something that is in demand and for which it faces little competition. The rent is created by perverse incentives in the academic system, where publications in high-IF journals are at a very large premium, since careers and research funding depend on them. Thanks to its past efforts, MDPI sits on several dozen journals with moderate-to-high IF and good reputations.

They could have chosen to continue with business as usual, slowly increasing the number of papers and the reputation. This is what a traditional publisher like Elsevier would have done. Elsevier makes money by selling subscriptions. The value of a subscription is given by the gems in your journal basket, so you take care of the gems and never let them lose their shine. But MDPI sells no subscriptions. It makes money per paper, and so it chose instead to exponentially increase the number of papers in its gem journals.

MDPI’s strategy boils down to exploiting its rent as fast and aggressively as it can, through the Special Issue model. The original journals’ reputations are used as bait to lure in hundreds of SIs and thousands of papers, in a growth with no limit in sight.

Despite the best efforts and good faith of MDPI and of all the guest editors and authors involved, the quality of journals that are inflated so much, and in such an uncontrolled way, is bound to go down. Thousands of SIs generate a collective action problem: each individual guest editor has an incentive to lower the bar a tiny bit for his or her own papers or those of his or her group. As we know from Elinor Ostrom’s Nobel Prize-winning work, collective action and common resources need careful governance lest the system break down. MDPI has chosen to give control of the reputation of its journals to hundreds of guest editors, with only loose or no control from the original editors, who were responsible for the journal’s reputation in the first place and who are, in the process, also drowned by a spiraling number of additional board members. Even if the vast majority of guest editors aim for quality, the very fast turnaround times make it harder. MDPI made things worse by sending mass spam invitations to edit special issues to nearly every scientist on the planet, thus increasing the chance of bringing on board less than conscientious guest editors who will exploit the system. When the system proved to work (2018 and 2019), MDPI doubled down by sending even more invitations (2020), and has recently floored the accelerator, mass-inviting special issues (500 per journal in 2021).

This is not sustainable, because there cannot be enough good papers to sustain the reputation of any journal at 10 SIs per day. And even if there were, the incentives are set for guest editors to lower the bar. Reputation is a common pool resource, and the owner of the pool just invited everyone in sight to take a share.
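The collective action argument can be made concrete with a deliberately stylised toy model (my own illustration, not something estimated from the data). Suppose each of N guest editors can lower the bar by one unit, gaining a private benefit b, while the journal’s reputation loses c per unit of lowering, a loss shared equally by all N editors. One editor then nets b - c/N from lowering the bar, while the group as a whole nets b - c. Whenever c/N < b < c, lowering the bar pays individually but hurts everyone, and the more editors share the pool, the wider that window. The parameter values below are invented.

```python
def private_marginal(b: float, c: float, n: int) -> float:
    """Net gain to one editor from lowering the bar by one more unit."""
    return b - c / n

def social_marginal(b: float, c: float) -> float:
    """Net gain to all editors together from the same unit of lowering."""
    return b - c

def is_dilemma(b: float, c: float, n: int) -> bool:
    """True when lowering the bar pays individually but hurts the group."""
    return private_marginal(b, c, n) > 0 > social_marginal(b, c)

# Hypothetical numbers: private benefit 1, reputational cost 5 per unit.
for n in (1, 3, 10, 500):
    print(n, is_dilemma(b=1.0, c=5.0, n=n))
```

With these made-up values, a journal run by one or three editors has no dilemma, while one shared by ten or five hundred does: the same private temptation, spread over more shareholders of the reputation, tips into overexploitation.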

If MDPI were a major record label, this strategy would amount to first putting in the effort to sign a dozen very popular bands and then, once the reputation is in place, asking their friends (or indeed anyone with a guitar) to produce more records under the name of the rock stars, for a low fee. Musicians of all levels jumped in. U2 released one album this year, but there are 400 invited bands releasing their albums under the U2 franchise. Buy the records, they are all as good as the original!

I don’t know why MDPI is so aggressive in exploiting its rent. It could have done it more slowly and still reaped substantial profits. The former CEO Franck Vazquez said in 2018, when the Nutrients Editorial Board resigned, that he “would be stupid to kill his cash cow” (source). This rings true: why exploit the rent so aggressively, knowing that reputation can decrease fast if journals are inflated? Still, the data show beyond doubt that MDPI is milking its bigger journals at increasing rates.

Economics offers some clues as to why aggressive rent extraction might be the best strategy for a firm in MDPI’s situation. In general, when you have a rent but feel that it might soon vanish, your best move is to suck it dry, fast. It is the economic reason why movie sequels suck, or why second albums are usually worse than the first. It is not the only reason, of course. But developing a good, say, teenage dystopian movie increases the likelihood that other producers will jump on the bandwagon and copy you; each further movie exploiting your trope decreases your rent, giving you incentives to hasten the production of your sequel and market it before it’s ready. If your model is easy to copy, you’d better be your own cheap imitation brand rather than wait for others to take that role (incidentally, this mechanism is also at work in the fashion industry, as masterfully described in Roberto Saviano’s Gomorrah and confirmed by this criminology paper).
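The “suck it dry, fast” logic can be illustrated with another stylised sketch (again my own toy, with invented numbers). Suppose the rent is still there in year t with probability s raised to the power t, and the firm can extract the same total either spread evenly over four years or front-loaded. With s < 1 the front-loaded schedule has the higher expected value; with s = 1 the two are equal. The toy deliberately ignores the reputational cost of front-loading, which is exactly the cost discussed above.

```python
def expected_value(schedule, survival):
    """Expected extraction when the rent still exists in year t (0-based)
    with probability survival ** t."""
    return sum(amount * survival ** t for t, amount in enumerate(schedule))

slow = [25, 25, 25, 25]  # total of 100, spread evenly over four years
fast = [70, 20, 10, 0]   # same total, front-loaded

for s in (1.0, 0.8, 0.5):
    print(s, expected_value(slow, s), expected_value(fast, s))
```

The lower the survival probability, the bigger the gap in favour of the front-loaded schedule, which is the incentive to hurry described in the paragraph above.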

Why would MDPI think that its rent will vanish? I don’t know. I suspect it might have to do with the recent move to Open Access of the main traditional publishers, which are opening OA subsidiaries of their main non-OA journals. Or with the increasing acceptance of repositories such as arXiv as legitimate “publications”, which would make the whole “publisher” concept history. But that’s just a hunch; I really have no clue.

Whatever the reason, I think that MDPI is aggressively exploiting its rent. It is not predatory in the sense of publishing everything for money. Still, it is a misleading and dangerous practice. Academics are puzzled by MDPI, and you can find people defending the quality of the papers and special issues they read and/or were involved with alongside people who completely loathe MDPI and deem it rubbish and predatory, because MDPI is both things at the same time.

The problem is that bad money always crowds out good money. With MDPI pushing the SI model faster and faster, the balance will shift sooner rather than later towards deeming MDPI not worth working with.

What if I am wrong?

I might be wrong. There are many good sides to the OA model that MDPI adopts.

It is more inclusive, for one. It breaks the gatekeeping done by the small academic elites that control the traditional journals in many disciplines. It probably makes no sense in the 21st century to limit journals to, say, 60 articles a year just because, back in the 20th century, we printed journals and space was at a premium. Breaking this (fictional and self-imposed) quantity constraint is a good thing.

All the above rings even more true for academics from the Global South, who face even higher hurdles to publish in the rather closed and limited traditional journals. Mister Sew from Ethiopia has provided me with several references on how, and how much, traditional publishers exclude researchers from the Global South. Non-Euro/Western researchers account for as little as 2% of published papers, and have virtually no representation on editorial boards. Some traditional publishers deny access to IPs from several African countries. References here, here, here, and in a special collection of articles on the role of OA for the Global South here. MDPI features a much higher share of editors and papers from the Global South, and is as such a liberating force. At the same time, MDPI publication fees are very high and unattainable for researchers from the Global South.

The special issue model is also very good in many respects. It has potential to be a clever way of organizing science in the digital age. We still live within the empty shells of the 20th century way of publishing science. That gave us another form of rent extraction, traditional publishers with their incredibly high margins on the back of scientists. A more open form of knowledge exchange, leaner, open access, and organized around topics rather than journals can be promising.

Fast turnaround times across all journals could be a sign of real productivity and logistic advances from MDPI, without affecting the quality of reviews. Those would be extremely welcome and a breath of fresh air in academic publishing.

In the comments, Volker Beckmann also made several arguments as to why, in his view, MDPI quality is not going down and will not decrease in the foreseeable future. Check out his points in the comments.

If you have more thoughts on this and think I’m wrong, I am happy to hear from you. If you are from MDPI and want to reply, I am even happier. This (too) long piece is meant as an exploration of an intriguing topic, and as a way to scratch a personal itch: last summer I was invited to guest edit for Nutrients, and was surprised to see how MDPI could at the same time be considered very good and very bad by colleagues.

Methods, data and code

The scripts and data to reproduce the analysis are available on the dedicated GitHub repo. The scrape was performed in March 2021 for the number of SIs, and in April 2021 for the turnaround times. All data are publicly available on MDPI’s website; I just collected and analyzed what is otherwise public. I would like to thank Dan Brockington for extensive discussion, encouragement, and comments on an early draft of this post. Thanks also to Joël Billieux, Ben Greiner, David Reinstein, and all the colleagues on the ESA-discuss forum for discussions on MDPI and publishing.

Additional Tables and Data

Journal growth, Special issue growth and share of SI articles on total articles for selected MDPI journals

Turnaround times at selected MDPI journals

Great and interesting article. I am an associate editor at an MDPI journal, and what is very professional is the organisation of the reviewer-finding process, which may be part of the success story behind the aligned turnaround times. The MDPI IT system suggests lots of reviewer names from a large database, which the associate editor either selects, or he/she suggests others. Then, in theory, an MDPI staff member approaches the reviewers to ask whether they would be willing to do the review.

Recently, I was not at all convinced by the expertise offered by the database, and suggested that they approach 8 or so scientists whom I consider peers and who were not listed in it.

The reviews came back very quickly and were very short and of poor quality. I realized that none of the reviewers I had suggested had been approached. I asked why and learned that the MDPI team had already sent out the manuscript for review to their preselected reviewers before asking me.

I was very much annoyed about this, and they apologized for this misbehaviour, but I doubt it was a mistake; I suspect it is common practice when things take too long according to their rules (I was not late with my response, but responded perhaps 2 days after I received the request). I have not resigned yet, but I will carefully watch future review processes and make sure that no decision is made in advance.

One thing I noticed, and have anecdotal evidence for as well, is that turnaround times are “rigged”.

What would be a major revision at another journal (sometimes) becomes a “reject, but resubmit and get the same reviewers”. The submission time then becomes that of the revised version, not that of the original version… and of course this makes the acceptance time much shorter than it would otherwise be.

It is a bad practice (let’s call it false advertisement), but at least it should not hurt the science.

Anyway, thanks for this article; I had been pondering the issue a lot, given that I have published in SIs and acted as a reviewer (with some review requests very far off the mark).

Thanks a lot for your interesting analysis. You mainly argue that it’s impossible to handle so many Special Issues and keep up the quality. I disagree.

MDPI has developed rules that work effectively and can secure quality of Special Issues even at large scale. You did not recognize these rules in your post.

You wrote: ”Thousands of SI generate a collective action problem: each individual guest editor has an incentive to lower a tiny bit the bar for his or her own papers or those of his or her group.”

This diagnosis is wrong.

Among other rules, Guest Editors are not allowed to make any decision on manuscripts written by themselves, by members of their group or by close peers. See: https://www.mdpi.com/editors. Decisions on those manuscripts are made by the Editors-in-Chief or other Editorial Board Members (EBMs). As an EBM of Resources and Land, I regularly make these kinds of decisions and secure the quality, but also support the development of Special Issues. I’m happy with that.

You argue that Guest Editors (GEs) devalue the work of the “original” editors. You wrote: “MDPI has chosen to give control of the reputation of its journals to hundreds of guest editors, with just loose or no control from the original editors, who were responsible for the journal reputation in the first place and who are also in the process drowned by a spiraling number of additional board members.”

This diagnosis is wrong again.

Special Issues are important right from the start of a journal, and a central task of an EBM is to support the development of Special Issues. GEs and EBMs co-evolve at MDPI journals. To be sure, these are distinct positions and GEs and EBMs have different roles. Thus, GEs are not necessarily EBMs and EBMs are not necessarily GEs, but there is quite some overlap. As already said, as an EBM I support the development of Special Issues and I’m happy with that. One reason is that I am supported in turn. As a GE I get support from other EBMs if I, members of my group or close peers submit a paper to a Special Issue I’m editing. By support I mean that some other EBM is willing to make rigorous editorial decisions. By the way, in these cases GEs do not know the identity of the decision maker (EBM) during the process. That’s very important.

And there is another way to escape the tragedy of the commons. You may have noticed that the names of the Academic Editors (the decision makers) are now printed on the manuscript. This creates clear responsibility and accountability.

You also claim that many Special Issues are recruited by “predatory” mass emails. Actually, I doubt this a bit. I was invited for one Special Issue and initiated three more without any further invitation. This seems to be a typical pattern: GEs have good experiences and from time to time initiate more Special Issues in their field of expertise on their own. You can see this pattern if you scroll through the list of EBMs. This tells a different story than yours.

As a GE for MDPI journals you get extraordinary support from the in-house Assistant Editors, but also from EBMs. From my experience I would say that’s the main reason why Special Issues at MDPI journals are so popular and developed into the growth engine of many MDPI journals.

Despite all the growth, please note that MDPI’s market share of the total number of publications was only 3.74% in 2020. See: https://www.scilit.net/statistic-publishing-market-distribution

Thank you for your feedback.

Volker, since my tweet has attracted considerable attention I took the liberty to advertise your comment and your different view on twitter too, so it gets the same visibility as my post. I will think more about your points and you surely have much more insight since you see things from the inside. The tweet is here: https://twitter.com/PaoloCrosetto/status/1382049674111123465?s=20

Excellent analysis. Could we see MDPI as some sort of publication pyramid scheme? At some point it is bound to collapse, and we could see four potential scenarios: MDPI goes fully predatory; MDPI downsizes significantly by focusing only on a few flagship journals (most journals would lose their IF); MDPI gets sold; or MDPI becomes a cheaper version of PLOS One by consolidating into a single journal. MDPI’s strength was that it became a voice for hundreds, even thousands, of scientists from the Global South who could not get into subscription-based journals for various reasons; the sad thing is that they are now being exploited through the numerous APC charges….

Paolo, please note that your analysis also ignores developments in the broader publication market.

Ever since I was invited in 2013 to guest-edit a special issue in Sustainability, I have followed the development of the publisher. That year MDPI published 9,852 articles. It was not listed among the 20 largest publishers, but it ranked 11th for open-access articles. In contrast, Elsevier published 591,872 articles in 2013, of which 96,552 were published open access. Elsevier was the leading publisher worldwide, and also the leading open-access publisher. In 2020, MDPI published 163,831 articles and became the fourth-largest publisher and the largest open-access publisher. Elsevier published 797,348 papers in 2020, of which 140,354 were open access. Elsevier is still the largest publisher, but now ranks only third in open-access publishing.

Thus, between 2013 and 2020 Elsevier increased its annual output by 205,476 papers, whereas MDPI increased its output by only 153,979 papers. Sure, in terms of growth rate the MDPI story is impressive; in absolute terms, however, its growth was smaller than that of the market-leading publisher.

This is to put things into relation.

The general market for publications is growing for multiple reasons. The number of papers published increased from 2,891,795 in 2013 to 4,376,690 in 2020. Thus MDPI managed to grow faster than the market, but the market is still very large and MDPI’s market share was only 3.7% in 2020. There is therefore still a lot of potential to grow in a competitive market.
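The two readings of “growth” in this exchange (absolute papers added vs. growth factor) can be recomputed from the SciLit figures quoted in this comment. The short Python sketch below does just that; the numbers are copied from the comment and not independently checked, and the `COUNTS` table and `growth` helper are illustrative names, not part of any SciLit API.

```python
# Publication counts quoted in the comment (source: SciLit), as (2013, 2020).
# These figures are copied from the comment, not independently verified.
COUNTS = {
    "MDPI": (9_852, 163_831),
    "Elsevier": (591_872, 797_348),
    "All publishers": (2_891_795, 4_376_690),
}


def growth(name: str) -> tuple[int, float]:
    """Return (absolute increase, growth factor) from 2013 to 2020."""
    start, end = COUNTS[name]
    return end - start, end / start


for name in COUNTS:
    delta, factor = growth(name)
    print(f"{name:<15} +{delta:>9,} papers, x{factor:.2f}")

# MDPI's share of all papers published in 2020.
mdpi_share_2020 = COUNTS["MDPI"][1] / COUNTS["All publishers"][1]
print(f"MDPI share of 2020 output: {mdpi_share_2020:.2%}")
```

Run as-is, it shows MDPI’s absolute gain (153,979) below Elsevier’s (205,476), while MDPI’s growth factor (about 16.6) far exceeds Elsevier’s (about 1.35) and the whole market’s (about 1.5): both sides of the argument follow from the same handful of numbers.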

Please note that all of the above data were retrieved from SciLit, a scholarly publication search platform maintained by MDPI: https://www.scilit.net/. Under “Rankings”, this platform also contains very useful information about the publication market, publishers and individual journals.

You will see that phases of exponential growth like Sustainability can also be found with the Journal of Cleaner Production or Science of the Total Environment.

https://www.scilit.net/journal/1003346

https://www.scilit.net/journal/288389

https://www.scilit.net/journal/389653

To be sure, there is no exponential growth that can be maintained forever. See the developments of Plos One or Scientific Reports.

https://www.scilit.net/journal/242073

https://www.scilit.net/journal/32605

As I said, you need to put things into relation.

By the way, SciLit is totally free of charge and contains very useful information for scientific communities. It was created and is maintained by MDPI. Now comes the question: why would a company that, according to your analysis, is “aggressive rent extracting” or likely to “shift towards more predatory over time” set up such a platform? Why would they set up Preprints? https://www.preprints.org/ Why would they set up an Encyclopedia? https://encyclopedia.pub/

I think your analysis reveals a deep misunderstanding of the company’s objectives.

dear Paolo

Your article is very interesting. For some time I have been trying to understand this phenomenon, which concerns not only MDPI but also Frontiers and the sister journals of prestigious titles (Scientific Reports, for example).

I want to point out an important factor you may not have considered: these papers have a positive feedback effect on the impact factor, similar to inflation in failing economies that print paper money.

More articles published = more citations placed on the market (1:50 at a minimum). These citations increase the impact factor of all journals, but in particular of the MDPI journals that cite themselves. But it can’t work! Sooner or later the system collapses! In the international academic world it is now clear that you cannot build a CV on publications in these journals; it only works in countries with poor career-advancement systems, such as Italy, or in the emerging world.

As an editorial assistant for two of MDPI’s special issues in the humanities, I can only report on that part of the elephant with which I am familiar — and my experience is that the articles we published were earnest and carefully-prepared contributions to their respective fields. There has been an implication that special issues are in some way underhanded — but why should we not take advantage of the unlimited capacity of the internet to explore our vast universe in a way that has never before been possible?

G. W. (“Glenn”) Smith

Dear Volker Beckmann

Your point about product extensions (SciLit, Preprints, etc.) may contradict Paolo’s ‘feeling’ (and mine too) that MDPI is milking the cow (or even killing the goose that lays the golden eggs). Or not…

Since you appear to know more about MDPI’s strategy, how do these crazy numbers of Special Issues fit in a long run strategy?

Do you also have an explanation for the fact that, in the last four months or so, I keep receiving emails such as this:

“Given your impressive expertise, we would like to invite you, on behalf of the Editor-in-Chief, to join the Editorial Board of Digital as our Topic Editor”. I have no expertise either in the subjects Digital is supposed to cover or in those of the handful of other journals they keep spamming me to edit for.

Regards.

My replies to all of you above. First, thanks for commenting, and providing insights and debate.

Stefan, Marco:

thanks for the insider view. Your comments add to mounting anecdotal evidence that, to deliver on their promise of short turnaround times, MDPI staff are heavily involved in editorial handling and sometimes in decisions. This is worrisome. They also cut corners: several people on Twitter have also pointed out that if a revision takes too long, it is rebranded as a new submission. This is mostly harmless, but it also depends on how the rejection statistics are computed.

Volker:

thanks for your insight. I might be wrong about the details of the process. For instance, on the level of control that Editorial Boards have over Guest Editors you surely know more than I do, and I take your point: there is probably more control than I thought. On the amount of spam, though, I am rather sure that you don’t see it because you are not a target of it. Several colleagues have gone public showing inboxes full of up to 4 or 5 invitations per week, often in unrelated fields. MDPI also seems to send direct messages on Twitter. The spamming strategy is clearly there and is clearly increasing in scope and volume. As for returning guest editors, I have no reason not to believe you, so I’ll take your point: many SIs can be edited by returning guest editors who had a good experience. But that cannot explain the exponential growth. In 2021 there are 7 times as many SIs as in 2020. How many returning GEs can there be, and can all of them have returned 7 times?

My point here is not that quality is currently bad: it is that with the level of invitations and of growth they are trying to achieve, it is most probably going to decrease. I can see we disagree on this, but I’ll stick to my point till I see evidence of the contrary.

Andy: I think it has elements of a pyramid scheme, but it is not one per se. They are using aggressive marketing strategies for their own journals; of this I am now sure. And aggressive marketing also means discounts and efforts to get into scientists’ networks… so it looks like a pyramid. Regarding the Global South, I have now added a box at the top with references. Indeed, MDPI is doing a lot more for the South than traditional publishers. To me, that still does not justify hijacking their own journals for short-term profit.

Andrea: thanks for the analogy, spot on.

Regarding the SI issue, I think the analysis exaggerates. Many papers published in Special Issues of MDPI journals were not originally submitted to a Special Issue.

Thanks for taking the time to analyse this data. And for the nice plots!

The uniformity with which MDPI reduces submission-to-acceptance times across the board reinforces my belief that there is deliberate manipulation here. In my experience as a one-time guest editor, when a paper comes back with major revisions, they explicitly ask you to label it “Reject and resubmit” if the major revisions cannot be addressed in less than 10 days or so, thus resetting the time between submission and acceptance.

In my view, MDPI’s success came from being early to exploit the lack of OA alternatives when these were rare. For instance, in my field of Remote Sensing, several colleagues and I looked positively at the arrival of a fully OA journal dedicated to the field (even before it had an IF). This encouraged many of us to send decent papers there (me included). A couple of targeted special issues curated by high-profile scientists further increased confidence in it as a valid option. One even provided early access to a very useful Earth System science dataset, which stimulated many good submissions based on that data. Then the IF arrived and encouraged more people to submit work there.

Yet, as the success of the journal (called “Remote Sensing”) rose, it also became evident that it was publishing studies of very variable quality. As a reviewer, I realized that even when rejection was recommended (with good arguments), the paper would still be published. It became clear that a paper submitted there had a (very) high chance of ending up published sooner or later (and if later, the “reject and resubmit” strategy mentioned above would ensure it still happened within a short time). When early-career scientists and students are required to have a list of papers on their CVs, MDPI ends up being a pragmatic solution for them.

However, I think this is now backfiring. Not necessarily for MDPI, which is still cashing in, but for the researchers who have inflated their CVs with such publications. When I see a CV with many MDPI papers listed, I cannot help thinking negatively of it, despite knowing that some of those papers might be good.

Another smart tactic of MDPI has been to exploit the Special Issue card, as you have shown. Awarding a badge of “guest editor” to early career scientists is a very good incentive for them to do the work (for free), including inviting and convincing peers to submit good papers, and it would also look good on an academic CV. However, now it seems literally anybody can be an MDPI “guest editor”, so the alleged prestige that goes with it has been lost too. This further goes in line with your suggestion of an aggressive rent extraction model.

Kind regards,

Greg Duveiller

If you want to understand MDPI as a company, please check out this blog

https://www.mdpi.com/anniversary25

Dear Beijokense,

Thank you very much for your response. Would you be so kind and disclose your full name? Unfortunately, I can’t find any academic named Beijo Kense.

As to your questions.

If you call the number of Special Issues “crazy”, you follow the mental model of traditional journals. Working with Special Issues is a building block of MDPI’s journal development. Take Digital, a newly established journal and the example you mentioned: https://www.mdpi.com/journal/digital. To date they have published 8 papers in total. One paper is part of a Special Issue. They currently have 8 Special Issues open for submission: https://www.mdpi.com/journal/digital/special_issues

This journal and its Special Issues will co-evolve. As the journal grows, the number of Special Issues grows (or, you could argue, the other way round). That’s what I mean by co-evolving. To date, Sustainability has published 4,069 papers in 2021. How many Special Issues are open? According to Paolo’s research, 3,178. The principle does not change. Special Issues allow you to manage small projects as parts of a larger project. This works at a small scale, and it works at a large scale. Sure, you need rules that prevent abuse of the system. As I explained, these rules are in place at MDPI.

Why does MDPI invite researchers to edit Special Issues? You can also see it this way: they want to support scientists all over the world. They want to accelerate open access to trusted knowledge. They want to change the game of publishing. They want to grow into a major publisher. You may want to read the interview with MDPI’s CEO: https://www.mdpi.com/anniversary25/ceo

Is there any limit to growth? Sure, exponential growth can’t be continued forever. However, the largest journal in 2020 was SSRN Electronic Journal with 39,238 papers followed by Scientific Reports with 22,437 papers published. See https://www.scilit.net/statistic-journal Sustainability, as the biggest MDPI journal, has published 10,669 papers in 2020. So there is still some room for growth. The same holds true at the level of publishers: In 2020, MDPI has published 163,831 articles and became the fourth largest publisher and the largest open-access publisher. Elsevier, the market leader, published 797,348 papers in 2020 of which 140,354 were open access. https://www.scilit.net/rankings So again, there is still room to grow. Nobody knows precisely where the limits are.

As to the emails: yes, all researchers get many emails every day from different publishers inviting us to submit to their journals, to review for them or to become editors. I agree that many of these emails are spam: they are not targeted, and they come more or less clearly from “predatory” publishers. I delete most of them immediately. Sometimes I check whether the publisher is trustworthy. However, I also get many invitations to submit or review papers from traditional journals and publishers, e.g. Elsevier, Springer, Wiley, Sage, etc. I consider them, but often I ignore (invitations) or decline (reviews) them due to time constraints or because they simply do not fit my profile. Some, however, I accept.

So far I have received serious invitations to become a (guest) editor only from MDPI, Frontiers and Sage journals. MDPI and Frontiers use similar, but slightly different, inclusive editorial models. MDPI works with Special Issues and Topics; Frontiers works with Research Topics. When I receive these invitations, I consider them carefully, but often again they do not fit or I don’t have the time. Some, however, I have accepted. Sure, sometimes these invitations really don’t fit my profile or interests (there is always some fuzziness unless you know the people very well). In these cases, I just ignore or decline them. However, I’m happy to know that these options exist. Without their emails, I might not know. Indeed, without their invitation I wouldn’t have started my first Special Issue in Sustainability in 2013. For me this was the beginning of learning a lot about academic publishing from an editor’s perspective.

Kind regards,

Volker

Thanks Volker Beckmann.

Emails as the one I cited, I only got from MDPI. As you can see from my profile, I have no ‘expertise’ in the fields of these journals:

Digital

Sensors

Education

Animals

Water

Mathematics

And I’ve received emails from MDPI telling me that I am an expert in these areas and inviting me to become a Topic Editor. I get emails from Elsevier, T&F, etc. because I opt in (usually when submitting a paper) to receive news on the fields I’m interested in, so I don’t feel spammed by them.

I concur with Paolo’s view that these massive ‘invitations’ will certainly decrease the quality of published papers, because you’ll get lots of Topic Editors that have little or no knowledge in the field and a poor publishing or review record. This, combined with a questionable review policy (you can find in Publons people reviewing an MDPI paper every other day), results in manuscripts being really poorly edited.

Best regards.

My profile: https://scholar.google.pt/citations?user=wuGfIBcAAAAJ

Dear Carlos,

yes, specifically these kinds of emails you could probably only get from MDPI. And only in the last four to six months.

The reason is that the position of a Topic Editor was just recently introduced. So MDPI journals obviously sent out lots of invitations to advertise this new position. Indeed, now almost every MDPI journal does not only have an Editorial Board, but also a Topic Board and a Reviewer Board.

See the example of Sustainability:

https://www.mdpi.com/journal/sustainability/editors

https://www.mdpi.com/journal/sustainability/submission_reviewers

https://www.mdpi.com/journal/sustainability/topic_editors

Related to the Topic Board, MDPI journals now also offer the possibility to edit not only Special Issues and Topical Collections, but also Topics, which can span multiple journals. See:

https://www.mdpi.com/topics/fruit_vegetable

https://www.mdpi.com/topics/hydroinformatics_data_science

Within the list of topic editors you can find researchers who are also potentially willing to collaborate on a specific topic. That’s a great idea. Let’s see how this will develop.

Thus, this new position, which was only introduced recently, probably explains the many emails that were sent.

Obviously, you did not accept any of the invitations you got. This is exactly what I would expect in your case.

As one colleague put it recently: “researchers have brains”! They can think, they can judge. Why should a researcher accept an invitation which is not in her/his field of expertise? Why should a completely inexperienced researcher accept such an invitation? I don’t think that this will happen often, if at all.

What if you had received an invitation from Tourism and Hospitality?

https://www.mdpi.com/journal/tourismhosp

But yes, there are some risks in having many and diverse editors. You need good mechanisms in place to secure quality. I mentioned a few that are in place at MDPI journals. One is that the names of the editors are listed on the paper, which creates individual responsibility and accountability. This is also done by Frontiers journals and by Plos One, among others (both work with very large editorial boards). In my experience, this mechanism alone works quite well to secure quality. And there are more mechanisms in place.

Kind regards,

Volker

This is a much-needed analysis of MDPI journals. MDPI is especially damaging to early-career researchers, as many of them, instead of aiming for top or reputed journals in their field, just publish in MDPI journals and then brag about their number of publications. I know many colleagues whose papers were rejected by reputed journals in my field; they sent the same articles without any modification to MDPI journals and got them accepted within a few days. I won’t say that all MDPI journals are predatory, but their practices are highly questionable (one colleague’s paper was rejected by at least 5 or 6 quality journals but accepted in a special issue of a so-called-high-impact-factor MDPI journal for which his own professor was a guest editor). Also, impact factors alone can never measure a journal’s reputation; journals that publish open access articles usually have a better chance of a higher impact factor.

Thank you very much for interesting analysis and (at least for me) novel view on the issue.

I work in environmental sciences. My WOS record currently counts 50 publications, about 30% of them in MDPI journals (silver goes to Elsevier with 20% and bronze to Springer with 16%). Thus the reputation of MDPI matters to me.

Positive points for MDPI:

1. The open-access fees are reasonable in comparison to Springer and Elsevier. Some research funders demand open-access publication, and grants are not unlimited.

Here I would propose a different view of what is “predatory”. Elsevier and Springer systematically acquire journals previously published by universities or other research institutions that used to be available online free of charge (in effect, free open access; examples include IJEST, Folia Microbiologica, Chemical Papers…). Now the big players sell this literature back to us researchers through subscriptions and, as a bonus (for them only), offer highly expensive open access. Reviewers work for free, editors mostly for free, and these publishers’ revenues are high, yet only a small proportion is returned to science (Oxford is an exception). I consider this more predatory than what MDPI does.

2. Speed of publishing.

MDPI has really mastered the review process and has largely eliminated the usual “waiting for action”. I have served as guest editor of two special issues for MDPI, and I serve long-term as an associate editor of the Springer journal IJEST. There we ask reviewers to finish their review within two weeks (while MDPI asks for 7 to 10 days), yet the whole review process takes many months, while at MDPI it takes weeks. The difference is obviously not in the reviewers but in the editorial process, where most of the time is wasted. Professional editors handle manuscripts immediately, whereas I, as a volunteer editor, do it in my spare time, of which I do not have much… The Editor-in-Chief usually distributes new submissions among associate editors in batches of 4 to 8. I cannot process them (i.e. pre-check them, desk-reject about a third on average, and find reviewers for the rest) in less than a week. MDPI also has a much better system for finding reviewers. It allows searching for reviewers with much more precise parameters, while at Springer we have rather broad indicators of reviewers’ expertise. This leads to submissions being sent to inappropriate reviewers, a high decline rate and consequently longer times. At MDPI the professional editor also does part of that job for you. The guest editor can add other reviewers, remove some or change the order. This saves a lot of time and speeds up the process.

3. Rejection rate. MDPI’s rejection rate is obviously lower than that of established journals. Thus, if your manuscript has been rejected a few times, MDPI offers a higher chance of acceptance and publication. In an academic career there are many occasions when you need a paper published (PhD defence, the approaching end of a project, a habilitation requiring a defined minimum WOS record, etc.), when the combination of rejection rate and publishing speed is useful.

This, however, does not necessarily mean lower-quality research. Sometimes I have the feeling that the top journals act as an “elite club”, where young researchers and those from the South, the East, etc. are declined up front. There is also the mantra of super-novelty, which case studies, for example, do not meet (yet it is useful to publish them). Let the readers decide.

Also consider that not all researchers have ideal instrumentation or a complex team, or can afford to repeat experiments 7 times (ideal for statistical evaluation), etc. They have little chance in competition with top teams holding large grants, but that does not mean their data are not worth publishing.

Why am I a bit afraid?

Generally, I am no more concerned about the review process at MDPI than at other, non-MDPI journals.

Experience as author: the majority of my MDPI submissions had three or four reviewers and, with one exception, they were all clearly qualified in the field; their comments were useful and led to improvement (or a deserved rejection). This is more than at other journals, where the standard is mostly two; I have had papers accepted in Springer and Elsevier journals (Q1 and Q2) with a single review only.

When I published in “my” special issue, the review process was handled by Editorial Board Members, so there was no conflict of interest.

Experience as reviewer: I have reviewed about 50 manuscripts for MDPI, and in most cases “reject” meant reject (in exceptional cases I was outvoted by other reviewers and a major revision or two rounds of revisions were carried out).

Experience as editor: here I really like the system for selecting reviewers. What I do not like is that when the reviewers agree, the professional editor sends the manuscript back for revision (and, for a major revision, into a second review round) WITHOUT any action by the academic editor / guest editor. I can only confirm the final acceptance (rejections are carried out by professional editors) or initiate a new round of reviews. This leaves more power with reviewers than with editors.

Compared to other journals, I can see a bit more room here for “review leaking”, i.e. an inappropriate guest editor or a combination of several inappropriate reviewers who would not be capable of finding the major flaws (mistakes, frauds…) in a manuscript and would let a poor or even fraudulent manuscript be published. Overall, however, I consider the review process correct and effective.

What is also a bit suspicious is the practice of reject + resubmission. This can lead to a higher reported rejection rate and shorter reported editorial times than in reality.

I do not consider the special issue mechanism problematic as long as the publisher selects appropriate editors. Compiling compatible papers into one issue increases visibility. Also consider that many papers go through “standard submission” and are assigned to a special issue later. And consider that with immediate publication the issue, whether standard or special, does not mean much. You can always browse by publication date or topic, or just search for what interests you.

To conclude, I should also add that I have been a big fan of open access since the ’90s, when as a student I had to visit many university libraries to reach literature that was published but generally unavailable. Paying 40 USD for a single paper is impossible for a student, and pirate services like Sci-Hub were not available. As a researcher and also part of university management, I consider open access a very good solution for the availability of literature, for its overall cost, and for disseminating results to a wider audience than just researchers (professionals, industry, media…). MDPI offers a good product for little money. As long as the editorial and review process is kept rigorous (i.e. until significant frauds are revealed), I will keep submitting to MDPI.

Dear Paolo,

Please note that with the new data your story about the exceptional role of Special Issues for MDPI journals growth is collapsing (at least for the ex-post analysis).

According to your calculation, the number of papers published in Special Issues increased 7.5 times between 2016 and 2020. All other papers increased 7 times in the same period (if I calculated correctly from your diagram). This is almost the same growth rate.

Thus, it is rather as I already stated: papers in Special Issues and in other parts of the journals co-evolve.

Papers assigned to Sections and Collections must be treated as “normal” papers. Sections and Topical Collections are structures which are permanently open for submission, unlike Special Issues (and newly Topics).

Section Editorial Board Members (and most Collection Editors) are general Editorial Board Members. This is often not the case for Guest Editors.

So your analysis is partly based on a misperception of “normal” papers and “normal” issues.

What is normal?

You seem to refer to traditional (print) journals which can be quite misleading when looking at modern journals.

MDPI journals publish papers continuously as soon as they are ready. However, every paper is officially assigned to an issue. MDPI journals publish either 2, 4, 12, or 24 issues of a journal per year. The number of issues is related to the journal size. Also the number of Sections is related to the journal size. See

Challenges 2 Issues: https://www.mdpi.com/2078-1547/11

Oceans 4 Issues: https://www.mdpi.com/2673-1924/1

Land 12 Issues: https://www.mdpi.com/2073-445X/9

Sustainability 24 Issues: https://www.mdpi.com/2071-1050/12

The issue number appears on the paper, in addition to the volume number and the article number. That’s very traditional, except that article numbers are used instead of page numbers.

Now, one issue is composed of papers assigned to Sections, Collections, Topics, Special Issues or of papers that are not assigned to any of those. This creates structure within an issue. And in general it creates structure within the online journal.

You define papers that are not assigned to any of these categories as “normal”. In doing so, you follow the logic of traditional (print) journals, which simply does not fit new types of electronic journals.

New electronic journals can easily create overlapping structures. A paper belongs to an issue, but also to a Special Issue, which belongs to a Section. A paper might just belong to an issue and a Section. Or it belongs to an issue and a Topical Collection, which belongs to a Section. A Topic can cover different journals. Quite a few combinations are possible. In any case, the article identifier for MDPI journals is related to the issue, not to a Section, Topic or Special Issue.

Thus the whole idea that there are “normal” papers or “normal” issues at MDPI journals is quite odd.

How to distinguish the different structural elements?

Sections – give the journals their general structure, often have a Section Editor-in-Chief, a Section Editorial Board and a Section Topical Board, are constantly evolving, have no deadline for submission; Topical Collections and Special Issues can belong to Sections.

Topical Collections – have Collection Editors (who are often Editorial Board Members), focus on a broader topic, no deadline for submission.

Special Issues – have Guest Editors (who might or might not be Topic Board or Editorial Board Members), are considered projects with a defined deadline for submission, papers are published immediately once they have successfully passed the review process; Special Issues evolve over time until they are closed; a Special Issue can be turned into a printed book.

Topics – have a Topic Editor-in-Chief and Topic Assistant Editors, cover different MDPI journals simultaneously, are considered projects with a defined deadline for submission, papers are published immediately once they have successfully passed the review process; Topics evolve over time until they are closed; a Topic can be turned into a printed book.

Thus, the distinction between Special Issues and normal issues doesn’t fit the reality of modern MDPI journals. It’s more complex.

Or it’s very simple: every paper is assigned to at least one Academic Editor (this can be an Editorial Board Member, Collection Editor, Guest Editor, or Topic Editor) who is responsible for making decisions. Her/his name appears on the published paper. Editors are not allowed to make decisions regarding their own submissions, submissions of members of the same institution or submissions from close peers. Editors-in-Chief are decision makers of the last resort (except for their own submissions, submissions of members of the same institution or submissions from close peers).

Kind regards,

Volker

P.S.: Please note that Frontiers has a similar but slightly different structure. Frontiers journals don’t use issues at all. A paper is assigned to a volume and has an article number. Every larger Frontiers journal has Sections. Sections, however, can also cover different journals. Every journal has Research Topics (similar to MDPI Special Issues). All Frontiers journals together probably have tens of thousands of Research Topics currently open for submission. Sections and Research Topics also create a complex overlapping structure. A paper is part of a volume, but potentially also part of a Research Topic and/or a Section. There will also be papers that are not part of any Research Topic or Section. As with MDPI journals, every paper is assigned to one Academic Editor, whose name appears on the paper. Unlike MDPI journals, the names of the reviewers also appear on the paper. In Frontiers journals, editors and reviewers are members of the Editorial Board. This is also different from MDPI. As a consequence, the Editorial Boards of Frontiers journals are usually very large, even compared to MDPI journals.

Take Frontiers in Psychology as an example: https://www.frontiersin.org/journals/psychology

30 sections

572 Research Topics open for submission

11,290 Editorial Board Members (as of May 1st, 2021)

3803 papers published in 2020

1683 papers published in 2021 (as of April 26th, 2021)

Thus, Frontiers journals, too, are modern and inclusive journals that don’t fit the traditional categories. They are also growing fast.

Thank you for an excellent review. Just some thoughts of an author who has not yet published with MDPI but is about to do so for the following reasons.

As you say: “Elsevier makes money by selling subscriptions”. As a result, good manuscripts are rejected for the wrong reasons. Elsevier journals ranked lower than MDPI journals pride themselves on acceptance rates of a few percent. Why not? The service has already been paid for. Without subscriptions, Elsevier’s article processing charges (APCs) for open access publishing exceed those of MDPI (sometimes by a factor of 2).

I understand why MDPI is so successful. As you mention: MDPI has reputable journals (rankings) and publishes good-quality papers. Compared to other journals, its APCs are reasonable. Turnaround time is fast. And most important, MDPI is inclusive compared to journals that only want to cater to a select circle of established research groups. It is time for a change.

Excellent analysis, bravo! Very useful for me. Today I received an invitation from the journal Universe to review an article. It was the first time I had heard of this journal, so I asked a person very close to me, who is an editor of a major journal in the field, for an opinion. But this person did not know the journal either. Universe has an IF of 1.75, which is not bad for an almost unknown journal. If I accept, I have only 10 days to answer, which is far too few for me. It is not that I would take 20 days to answer, but my agenda is too busy and I need more time to find the 8 hours it takes me, on average, to write a report. Finally, MDPI is in general too expensive for a third-world country. Traditional journals with even higher IFs, under the old scheme of subscriptions and page charges, are much less expensive. In some you don’t pay at all (think of Monthly Notices of the RAS, with IF = 4). And, last but not least, if you come from a developing country and have a paper accepted in a traditional journal, you can always ask not to pay because your science agency does not cover page charges. It normally works. Some people consider this a dishonor, but others see it as the way collaboration works. And I know very good scientists who made their professional careers without paying a penny for publishing.

Great article, Paolo, so interesting and so well supported by data. I had one unpleasant experience with an MDPI journal. After an invitation to submit with a full APC waiver, the company requested payment. I showed them the original invitation they had signed, and got a simple response: sorry, our policies have changed. As you can guess, my manuscript went elsewhere (and got accepted).

I can only add a small piece of evidence supporting the hypothesis that MDPI’s only interest is to collect more and more fees.

I was contacted to serve as a co-guest editor. I accepted, since the main guest editor was a friend of mine and a well-respected scientist in my field. From the very beginning, the MDPI managing editor made clear that we MUST collect X papers (I don’t remember the exact number), otherwise the special issue would be cancelled/closed. We invited our colleagues to participate. When a submission came in, the guest editors were asked to make an initial decision based on 4/5 criteria, all very easy to fulfill (pertinence to the issue, decent English, and so on). Then, if the initial decision was positive, the managing editor suggested a list of potential reviewers to “help” and guide our choice. Most of the suggested experts were young investigators with few papers and a low to very low h-index. I tried to suggest more pertinent reviewers (in a dedicated space), but none of them was contacted (I asked some of them to check). I complained about this to the managing editor but received no answer. Overall, the quality of the review reports was very low. With these reports in hand, we were asked to decide on acceptance or rejection after the first round of revision. No further revisions were allowed. Most of the time, our final decision was “respected” by the managing editor. However, our decisions on 2 papers, one acceptance and one rejection, were overturned by the managing editor, who changed them without informing us before sending the communication to the authors. I complained again, and this time I received a response from the managing editor, who explained his/her motivation to me, clearly showing that she/he had read the reviewers’ comments and basically made a decision on his/her own.

As one of the comments stated, one paper that included one of the guest editors as an author was not handled through this process, but followed the normal procedure for regular submissions, and it was accepted after minor changes were requested.

Overall, 9 papers were published while 3/4 were rejected. The rejected papers (including the one with the overturned decision) were almost all submitted close to the end date of the special issue (when the required minimum of published papers had likely been reached).

Two days ago, less than one year after that special issue closed, I received an e-mail from MDPI (sent to me and to the other guest editor) asking us to set up another special issue as a follow-up to the previous one. The managing editor was different from the previous one, and although my email address was correct and the other guest editor’s name was correct, they referred to me as Dr. Phillips, which is not me.

In brief, the impression this experience left on me is that MDPI is always looking for guest editors to promote the journals and widen their pool of potential submissions, but it does not care at all about the scientific opinions of those guest editors. It uses them (or at least it used me) only as a tool to reach the widest possible audience, while running the review process in a completely automatic and questionable manner, contacting researchers with a low reputation just to keep the process fast.

I had a similar sensation before, as a reviewer and not as a guest editor, with Oncotarget. Everybody knows how the story ended.

In summary, while I am quite sure that MDPI publishes both high- and low-quality manuscripts, the editorial process and the approach of the journal are highly questionable. Thus, I have never submitted (and will never submit) to them any manuscript on which I am the corresponding author. I also discourage my colleagues from doing so.

There are plenty of journals with similar IFs that do not charge authors to publish, at least in my field (biology/medicine). Even though such journals (and publishers) make money through subscriptions, I personally agree with the “old” idea that fewer papers means higher mean quality, more value for the journal, and so on. As a result, I personally treat with caution the findings of papers from this publisher (and others with a similar style).

Another point deserving attention might be the ratio between the number of published items and the number of corrections/retractions. I do not have statistics, but if a publisher publishes a lot, then, statistically, a high number of retractions/corrections should be expected. It would be interesting to know whether MDPI’s ratio of publications to retractions is comparable to, higher than, or lower than that of other, non-open-access publishers. If higher (lots of publications, few retractions), I would interpret this as a lack of serious follow-up by the publisher on issues raised by the scientific community. The opposite scenario would suggest that the review process is probably of low quality, but that the publisher takes the process of making science seriously (which is the most important point in my opinion). If the ratio is comparable to other publishers, it means that the overall process is OK and MDPI can go on making millions for years and years without, at least, doing serious damage to science.

I came across these MDPI papers when I was doing my PhD, and I was quite happy that they were open access. And being an “infant” in academia at that time, I was amazed that these MDPI journals had such high impact factors. But after learning about their APCs, it was practically impossible for us (i.e. my supervisor) to publish in such journals. Now that I am in my “teenage” years, I am quite convinced that MDPI has some trick to be what it is today (which has been partly answered by your analysis. GOOD JOB!).

Nevertheless, I am a bit puzzled: apart from being open access, how do these MDPI journals maintain their impact factors while publishing so many articles every year? This is just a very innocent question, but do the editors have the “power” to insert self-citations into the articles? I hope someone can enlighten me on this.

Self-citation data can be found in Web of Science. They vary a lot. For example, the top-rated MDPI journal Cancers has almost 50% self-citations, while Sensors has around 20% and Antioxidants only around 10%.

The 2019 impact factor of Cancers after correction for self-citations is still 5.492, so no, Cancers does not have a 50% self-citation rate. Check your sources carefully…

I am very sorry, I looked at the wrong line and took the wrong number 😦

Thank you for these analyses. Personally, I have had two contradictory experiences with MDPI.

First, I published a paper in IJERPH, and the review process was clearly similar to that of other “traditional” journals, except that the second round of review was much less demanding, and that in the third round a decision was made, even though I still think the manuscript had some weaknesses and could have been improved further.

This leads me to think that this fast decision at the end of the process (around 30 days) was partly influenced by the need to “reduce time to acceptance” and to fit the norms.